Why Your Competitors Dominate AI Search Results, and You Don’t

If you work in SEO, content, or digital strategy today, you have probably done this yourself.

You open ChatGPT, Perplexity, or Google’s AI mode. You search for your category. Your competitors are mentioned. Your brand is missing.

That moment is frustrating, but it can also be misleading.

AI search is not broken, and it is not random. In most cases, brands lose visibility in AI answers for very specific, measurable reasons. The problem is that most teams are still trying to evaluate AI search with old SEO instincts.

In this article, I will walk you through why competitors dominate AI answers, how to properly analyze AI visibility, and how to determine whether AI search optimization is worth investing in. Along the way, I will use concrete examples from a brand visibility campaign I created for Lululemon to illustrate what these patterns look like in practice.

AI visibility is not the same as SEO rankings

The first mistake most brands make is assuming that AI search behaves like Google rankings. It does not.

AI platforms do not rank pages in a list. They generate answers by synthesizing information from multiple sources, prompts, and brand associations. Visibility in AI search is therefore about presence and influence, not position.

This aligns with recent deep dives into how large language models retrieve information. As detailed in David McSweeney’s breakdown of how ChatGPT works, AI systems rely heavily on retrieval pipelines that pull from a limited, curated set of web sources before generating an answer. If your brand is not part of that retrieval set, it effectively does not exist for that prompt, regardless of how strong your SEO performance might be.

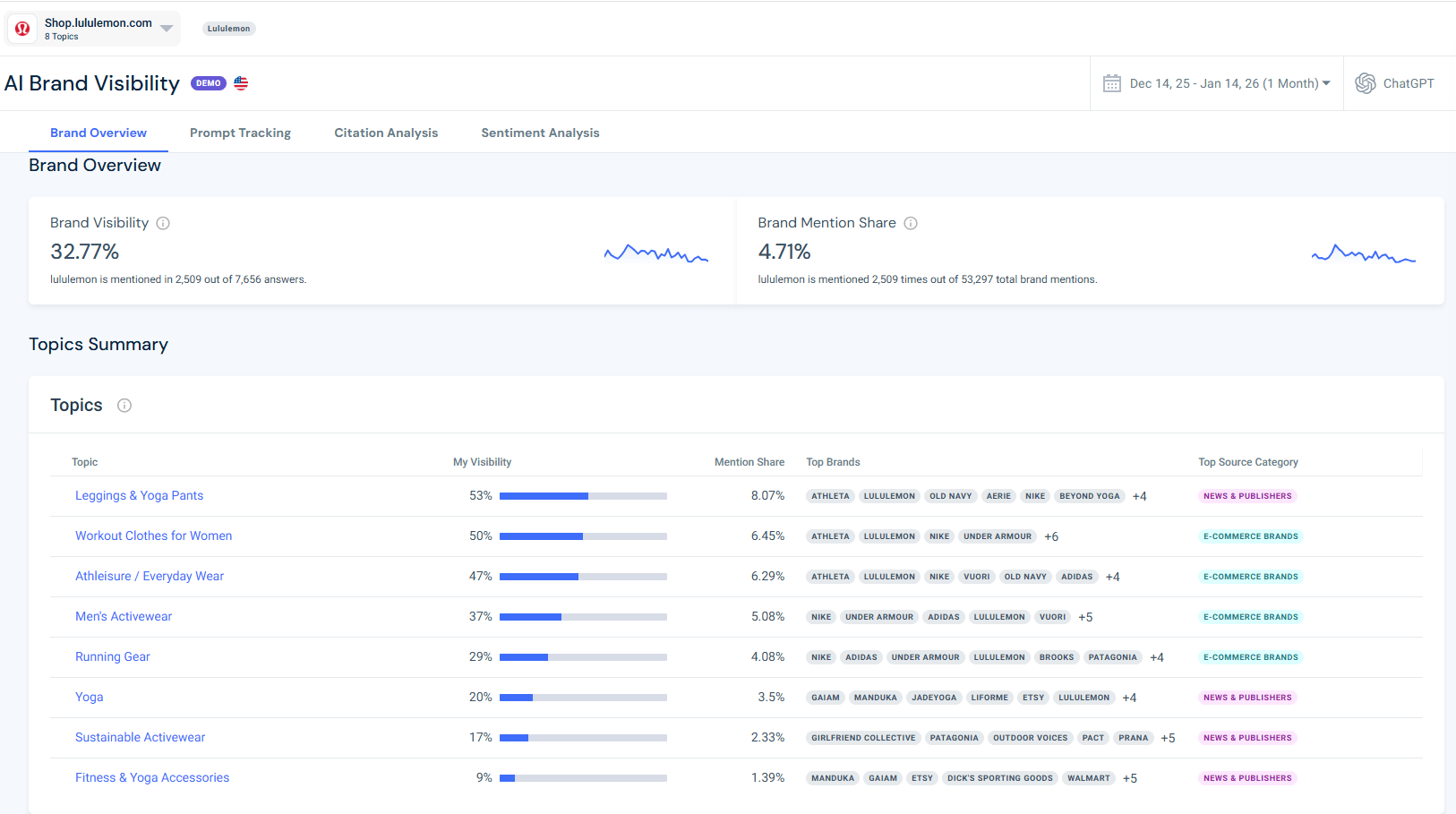

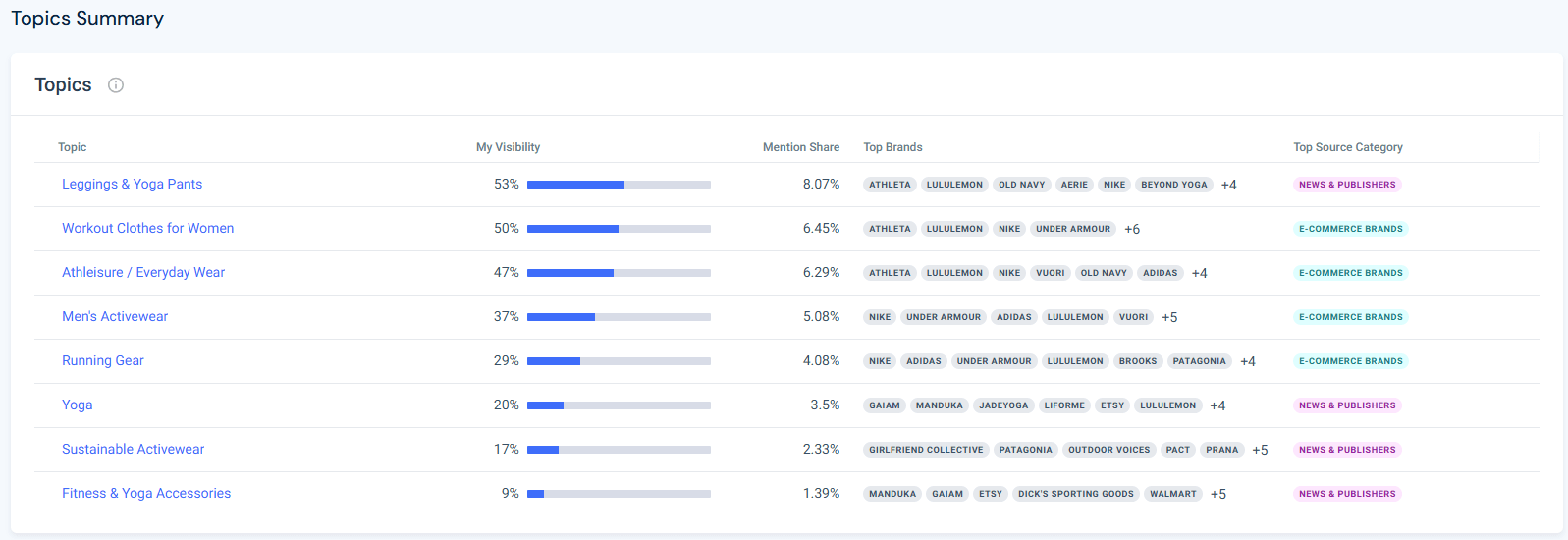

Using Similarweb’s Gen AI tools, specifically the AI Brand Visibility tool, I ran a campaign for Lululemon and found that the brand appeared in 32.77% of AI-generated answers across tracked topics. At the same time, its brand mention share was only 4.71% of all brand mentions across those answers.

That gap is important. It shows that a brand can appear regularly, yet still be overshadowed by competitors that are mentioned more often or across a wider range of prompts.

This is why manually testing a handful of prompts almost always leads teams to the wrong conclusion.
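To make the difference between the two metrics concrete, here is a minimal Python sketch. It assumes that visibility is the share of answers mentioning the brand at least once, and that mention share is the brand's mentions divided by all brand mentions. Similarweb's exact definitions may differ, and the brand names and sample answers are made up for illustration.

```python
from collections import Counter


def visibility_and_share(answers, brand):
    """Return (visibility, mention_share) for one brand.

    answers: list of lists, each holding the brand names mentioned in one AI answer.
    Both definitions are assumptions made for this sketch.
    """
    total_answers = len(answers)
    answers_with_brand = sum(1 for mentions in answers if brand in mentions)

    # Count every brand mention across every answer.
    all_mentions = Counter(name for mentions in answers for name in mentions)
    total_mentions = sum(all_mentions.values())

    visibility = answers_with_brand / total_answers if total_answers else 0.0
    share = all_mentions[brand] / total_mentions if total_mentions else 0.0
    return visibility, share


# Hypothetical sample: four AI answers and the brands each one mentioned.
answers = [
    ["BrandA", "BrandB", "BrandC"],
    ["BrandB", "BrandC", "BrandD"],
    ["BrandA", "BrandB", "BrandC", "BrandD"],
    ["BrandB", "BrandD"],
]

visibility, share = visibility_and_share(answers, "BrandA")
print(f"visibility: {visibility:.0%}, mention share: {share:.1%}")
```

In this toy sample, BrandA appears in half of the answers but accounts for only 2 of the 12 brand mentions, which is the same kind of gap described above.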

Why most teams feel blind when comparing AI visibility to competitors

One of the biggest frustrations teams face with AI search is not just low visibility, but the lack of any clear way to benchmark where they stand.

Most brands have no reliable answer to basic questions like:

- How often does our brand appear in AI answers compared to competitors?

- Which competitors dominate specific topics or use cases?

- Are we missing entirely, or just losing share of voice?

Without data, teams default to anecdotal testing. They run a few prompts, see familiar competitors, and assume they are losing everywhere.

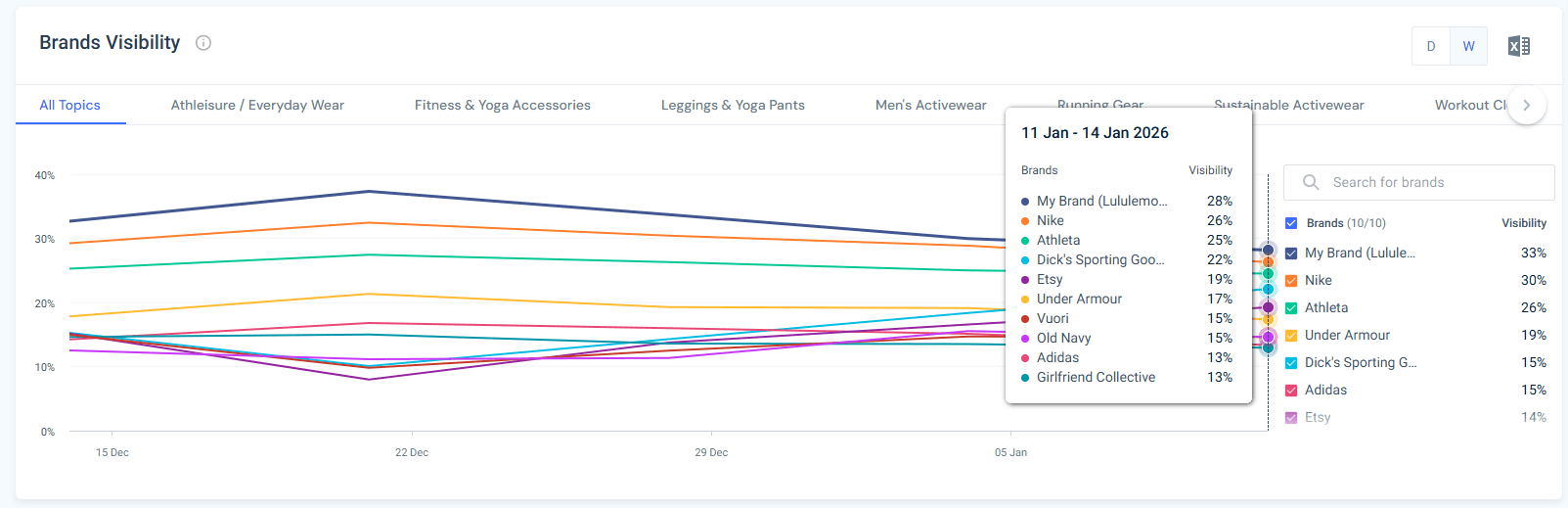

Using Similarweb’s AI Brand Visibility tool makes this comparison explicit. Instead of relying on isolated answers, you can see visibility by topic, compare it directly against competitors, and track how those relationships change over time.

In the Lululemon campaign, this distinction was critical. At a glance, the brand appeared to be present across AI answers. But competitor benchmarking revealed where Lululemon was clearly leading and where it was falling behind, particularly in sustainability-related topics.

This kind of clarity is what turns AI search from a source of frustration into something you can actually analyze and manage.

The three reasons competitors dominate AI answers

When you look at competitive AI analysis across categories, the same three issues appear repeatedly.

1. Prompt mismatch: users ask questions you don’t answer

Users do not interact with AI the same way they use traditional search engines. A single query often triggers a query fan-out, where the system explores many related intents behind the scenes.

In the Lululemon campaign, the brand performed well for broad prompts tied to core categories like athleisure, leggings, and workout clothing.

However, it consistently disappeared from more specific prompts focused on attributes such as sustainability, inclusivity, accessories, and secondary use cases.

Competitors that appeared in those answers were not necessarily larger brands. They were brands that already had content and third-party mentions aligned with those specific intents.

If your content does not clearly address the questions users are asking, AI will not infer the connection on your behalf.

2. Source gap: AI relies on sites you don’t appear on

AI answers are shaped by a relatively small set of trusted sources. These typically include:

- Major publishers and media sites

- Health, fitness, or industry authorities

- Review platforms and curated comparison articles

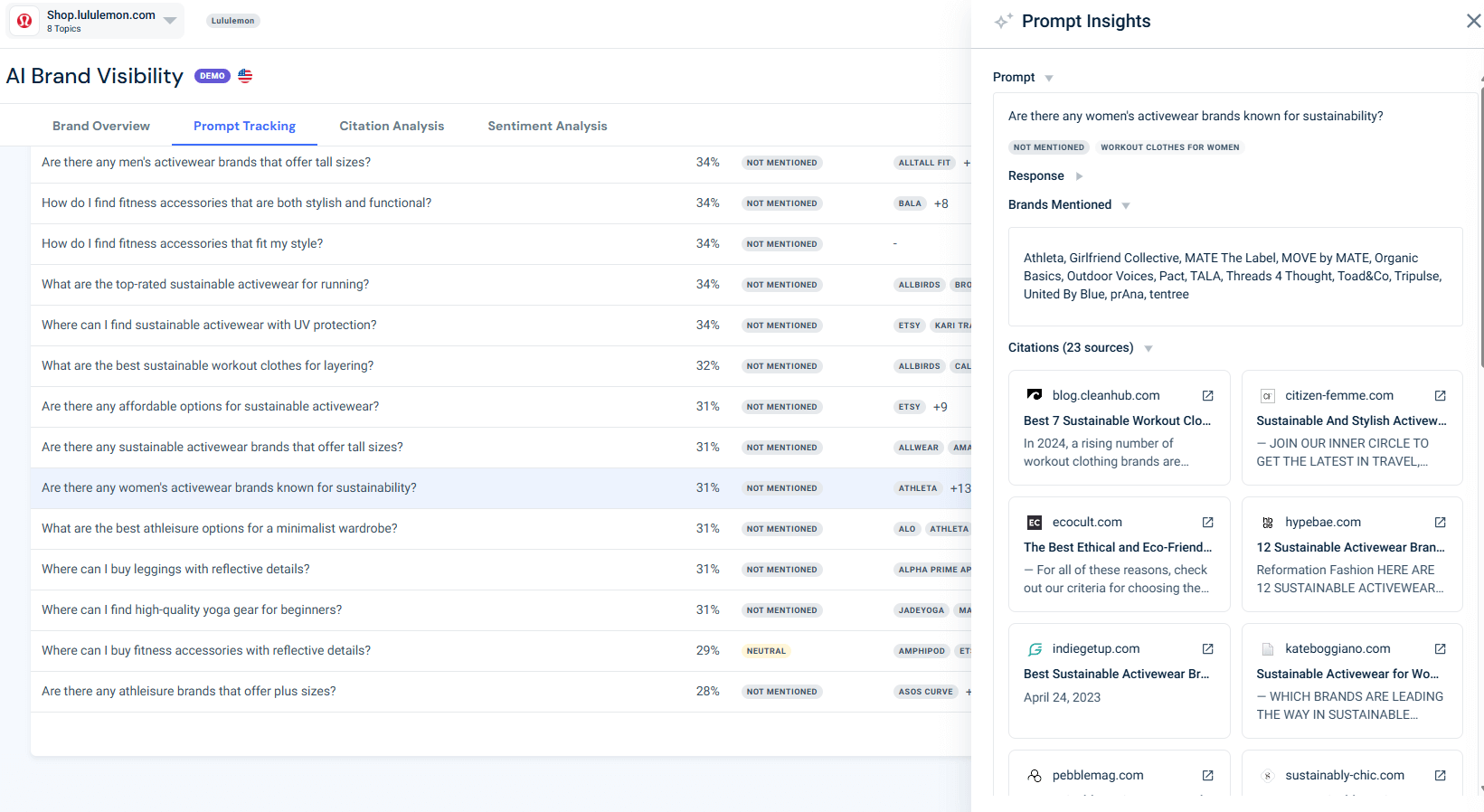

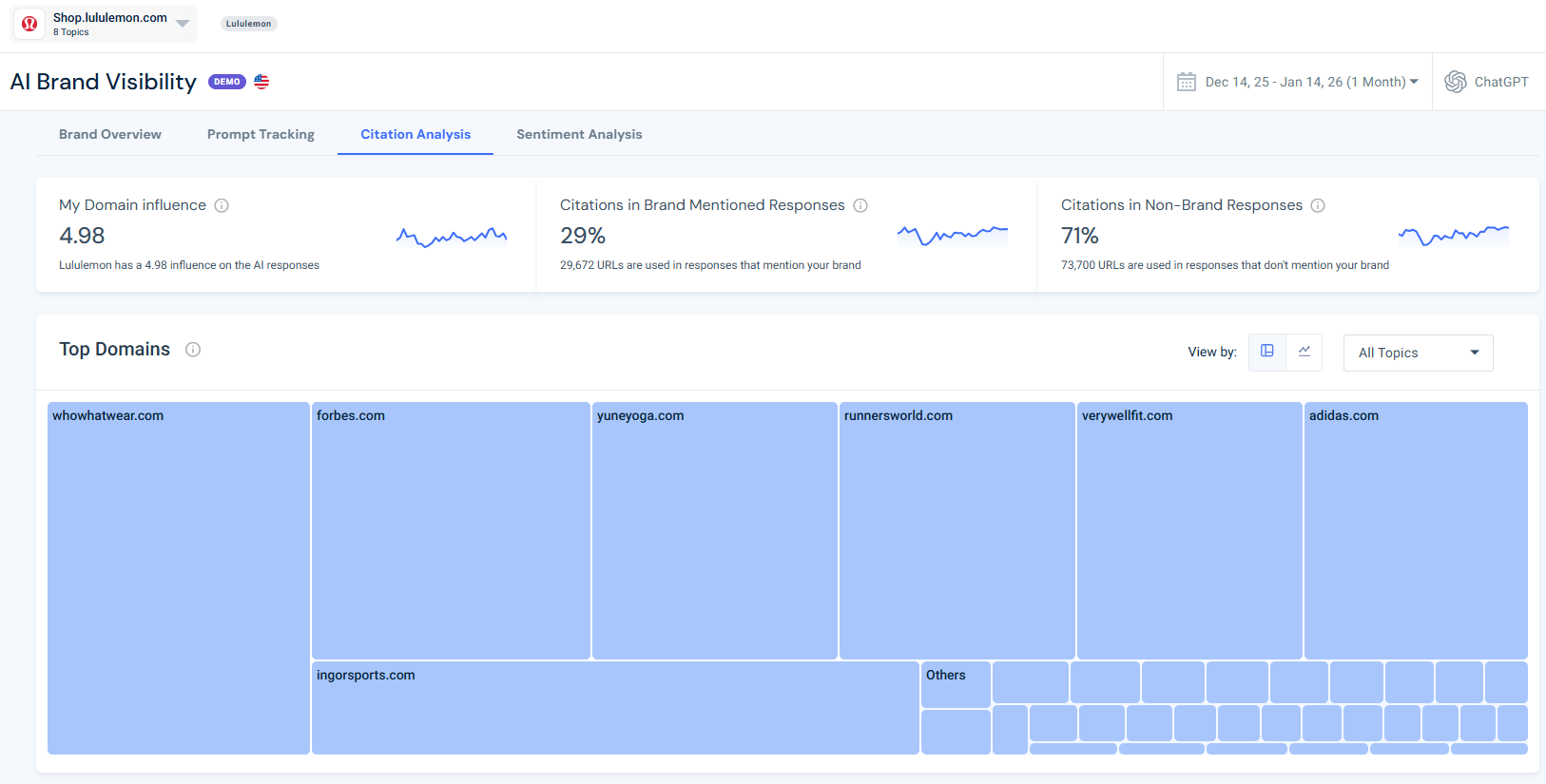

When I used Similarweb’s AI Citation Analysis tool in the Lululemon campaign, it became clear that competitors were being reinforced by these sources far more often in certain topics. As a result, AI systems repeatedly surfaced those competitors when answering related prompts.

This is the core idea behind performing AI citation analysis and AI citation gap analysis. If the sources shaping answers do not mention you, the answers will not mention you either.
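A citation gap can be thought of as a simple set comparison. The sketch below is an illustration only, not how any particular tool works: the domains, brand names, and the `citation_gap` helper are all hypothetical, and it assumes you already have a mapping from cited domains to the brands each one mentions.

```python
def citation_gap(citations, brand, competitors):
    """List cited domains that mention competitors but not `brand`.

    citations: dict mapping a source domain to the set of brands it mentions.
    Returns (domain, competitors_present) pairs, with the domains that
    feature the most competitors first.
    """
    rivals = set(competitors)
    gap = []
    for domain, brands in citations.items():
        rivals_present = sorted(brands & rivals)
        if rivals_present and brand not in brands:
            gap.append((domain, rivals_present))
    # Sort by number of competitors (descending), then by domain name.
    return sorted(gap, key=lambda item: (-len(item[1]), item[0]))


# Hypothetical citation data for one topic.
citations = {
    "publisher.example": {"RivalOne", "RivalTwo"},
    "reviews.example": {"OurBrand", "RivalOne"},
    "listicle.example": {"RivalTwo"},
    "forum.example": {"OurBrand"},
}

gap = citation_gap(citations, "OurBrand", ["RivalOne", "RivalTwo"])
for domain, rivals_present in gap:
    print(domain, rivals_present)
```

Domains that appear in the result are the ones where competitors are being reinforced and your brand is absent.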

3. Entity and authority gaps

AI systems rely on repeated signals to associate brands with categories and attributes. Even well-known brands can lose visibility when those associations are not reinforced consistently.

In the Lululemon example, topic-level AI visibility data showed strong visibility in core apparel topics but a sharp drop in areas like sustainability and fitness accessories. That does not mean the brand is irrelevant in those areas. It means AI does not consistently see evidence connecting the brand to those attributes.

This is where analyzing competitors’ AI brand visibility becomes essential.

How to track AI visibility properly

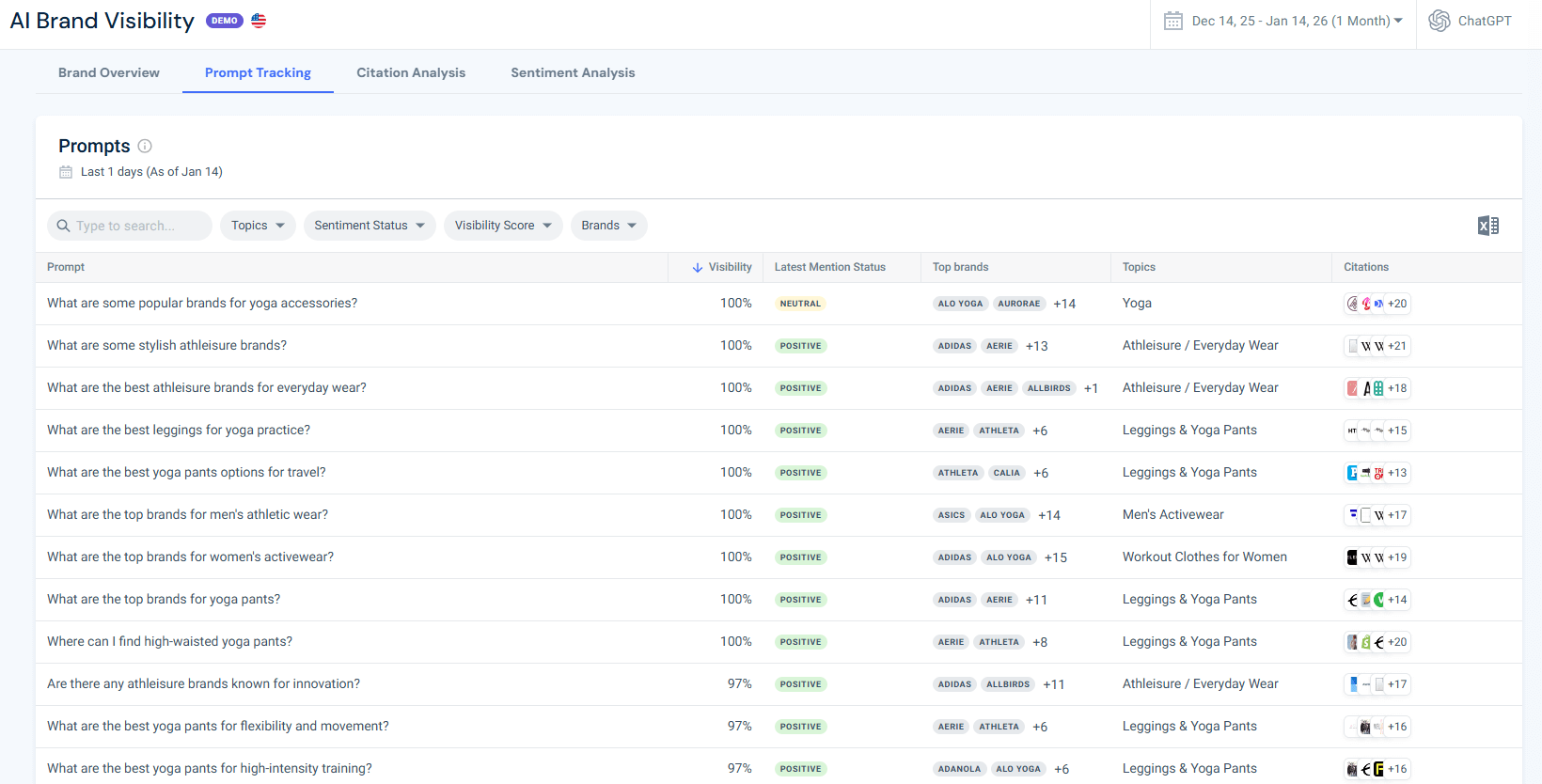

Spot-checking AI answers does not scale. To understand what is actually happening, you need a systematic approach.

A proper AI visibility analysis should focus on topics rather than individual keywords, and should answer questions such as:

- How often does my brand appear across AI answers for key topics?

- How does that visibility change over time?

- How does my visibility compare to direct competitors?

Using Similarweb’s AI Brand Visibility topic analysis, the Lululemon campaign showed strong visibility in leggings and athleisure, moderate visibility in running gear, and very low visibility in sustainability-related topics. Without this breakdown, it would be impossible to prioritize where to act.

This is what most teams mean when they talk about GEO KPIs. Visibility by topic, share of mentions, and influence trends are far more actionable than trying to optimize for a single AI answer.
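As a rough illustration of topic-level tracking, the following Python sketch computes visibility per topic for several brands. It assumes each tracked answer is already labeled with a topic and the set of brands it mentions. The records, brand names, and function name are hypothetical.

```python
from collections import defaultdict


def visibility_by_topic(records, brands):
    """Compute, per topic, the share of answers that mention each brand.

    records: iterable of (topic, set_of_brands_mentioned_in_answer).
    Returns {topic: {brand: visibility between 0.0 and 1.0}}.
    """
    totals = defaultdict(int)
    hits = defaultdict(lambda: defaultdict(int))
    for topic, mentioned in records:
        totals[topic] += 1
        for brand in brands:
            if brand in mentioned:
                hits[topic][brand] += 1
    return {
        topic: {brand: hits[topic][brand] / totals[topic] for brand in brands}
        for topic in totals
    }


# Hypothetical tracked answers, one tuple per AI answer.
records = [
    ("leggings", {"OurBrand", "RivalOne"}),
    ("leggings", {"OurBrand"}),
    ("sustainability", {"RivalOne"}),
    ("sustainability", {"RivalOne", "RivalTwo"}),
]

table = visibility_by_topic(records, ["OurBrand", "RivalOne"])
for topic, scores in table.items():
    print(topic, scores)
```

Running the same calculation on each reporting period would give the trend over time for every topic and competitor.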

Why some domains shape AI answers more than others

One of the most important insights from AI citation analysis is how concentrated influence really is.

Using Similarweb’s AI Citation Analysis tool, I found that across multiple topics, the same types of domains appear again and again as sources. Large publishers, health and fitness authorities, and niche comparison sites often carry disproportionately high influence scores.

This mirrors what other research has observed about AI search systems. As outlined in industry analyses of how AI search engines decide which brands get seen, generative platforms tend to favor sources that are already well-established, frequently referenced, and easy to summarize. Authority, repetition, and clarity matter more than perfect on-page optimization.

In the example campaign, sustainability-focused prompts repeatedly cited fashion publishers and curated listicles. Brands that appeared on those sites benefited across dozens of AI answers without creating new content themselves.

This is why GEO and AEO are not just on-site exercises. Off-site visibility plays a critical role in how AI systems decide which brands to feature.

Prompt intent matters more than most teams expect

Another pattern that stood out in the analysis was how dramatically outcomes changed based on intent.

Broad prompts like “best athleisure brands” tended to surface familiar names. But as soon as prompts became more specific, asking about sustainability, sizing, pricing, or accessories, the answers changed completely.

Analyzing prompt intent helps explain why teams often perceive AI search as inconsistent. In reality, AI responds very consistently to different intents.

Tracking prompts by topic and intent makes these gaps visible and actionable.
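One simple way to start grouping prompts by intent is keyword tagging. The sketch below is a deliberately naive assumption, not the method used in the campaign: the keyword lists are invented for illustration, and a real setup would likely need a richer classifier.

```python
import re

# Hypothetical keyword lists; tune these to your own category.
INTENT_KEYWORDS = {
    "sustainability": {"sustainable", "sustainability", "recycled", "ethical"},
    "sizing": {"size", "sizes", "sizing", "petite", "plus"},
    "pricing": {"price", "pricing", "cheap", "affordable", "budget"},
    "accessories": {"accessories", "bag", "bags", "mat", "mats"},
}


def tag_intents(prompt):
    """Return every intent whose keywords appear as whole words in the prompt.

    Prompts that match no keyword list are treated as broad category prompts.
    """
    words = set(re.findall(r"[a-z]+", prompt.lower()))
    matched = [
        intent for intent, keywords in INTENT_KEYWORDS.items() if words & keywords
    ]
    return matched or ["broad"]


for prompt in [
    "best athleisure brands",
    "Most sustainable leggings?",
    "affordable yoga accessories",
]:
    print(prompt, "->", tag_intents(prompt))
```

Matching on whole words rather than substrings avoids false hits such as "mat" inside "materials".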

A practical AI visibility improvement plan

In my opinion, the fastest path to improving AI visibility is not publishing more content blindly. It is closing specific, measurable gaps.

In practice, that means:

- Identifying which prompt clusters you are missing

- Understanding which sources decide the answers to those prompts

- Closing the gap with a combination of on-site and off-site actions

On-site actions: make your content easier for AI to cite

In the Lululemon campaign, sustainability-related topics showed very low visibility compared to core apparel categories. That kind of gap is a clear signal that AI does not have strong, easy-to-cite content connecting the brand to that attribute.

On-site actions that help close these gaps include:

- Dedicated sustainability pages that clearly explain materials, sourcing, and practices

- Certifications and standards pages that AI can reference directly

- Product attribute hubs covering topics like recycled materials, supply chain transparency, or ethical production

- Clear, non-promotional summaries and FAQs that are easy for AI systems to extract

The goal is not to convince AI. It is to make the relevant information explicit and accessible.

Off-site actions: strengthen presence where AI already looks

Citation analysis effectively hands you a target list.

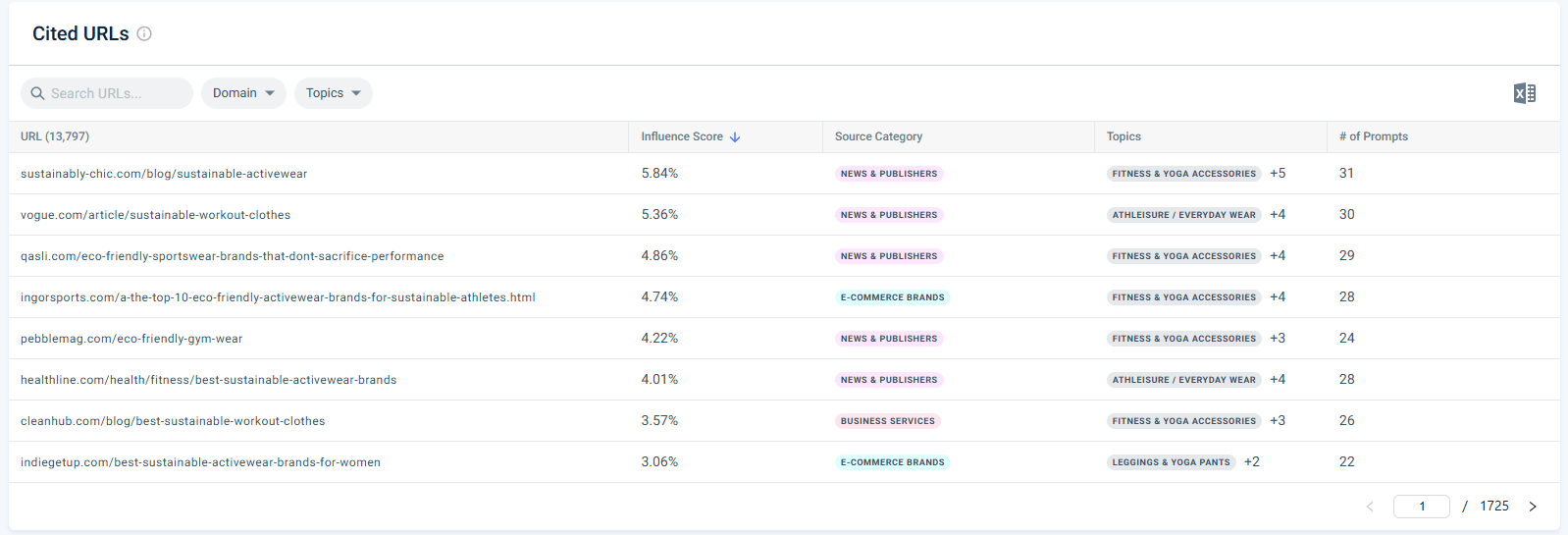

In the sustainability prompts from the campaign, the most influential cited URLs came from curated listicles and authority publishers, including pages like:

- sustainably-chic.com/blog/sustainable-activewear (Influence Score 5.84%, used in 31 prompts)

- vogue.com/article/sustainable-workout-clothes (5.36%, 30 prompts)

- healthline.com/best-sustainable-activewear-brands (4.01%, 28 prompts)

When AI repeatedly cites these types of pages, improving visibility is no longer just an SEO exercise. It becomes a digital PR, partnerships, and review ecosystem challenge.

Appearing on the sources that already shape AI answers often has more impact than publishing additional content on your own site.
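Turning citation data into a prioritized outreach list can be as simple as sorting. The sketch below uses the three URLs and figures listed above. The `CitedPage` type, the `outreach_targets` helper, and the `min_prompts` threshold are hypothetical choices made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CitedPage:
    url: str
    influence_pct: float  # influence score as reported, in percent
    prompts: int          # number of tracked prompts that cited the page


# Figures taken from the sustainability prompts in the campaign.
pages = [
    CitedPage("vogue.com/article/sustainable-workout-clothes", 5.36, 30),
    CitedPage("healthline.com/best-sustainable-activewear-brands", 4.01, 28),
    CitedPage("sustainably-chic.com/blog/sustainable-activewear", 5.84, 31),
]


def outreach_targets(pages, min_prompts=0):
    """Keep pages cited in at least `min_prompts` prompts, most influential first."""
    eligible = [page for page in pages if page.prompts >= min_prompts]
    return sorted(eligible, key=lambda page: page.influence_pct, reverse=True)


# The threshold of 30 prompts is an arbitrary cut-off for this example.
targets = outreach_targets(pages, min_prompts=30)
for rank, page in enumerate(targets, start=1):
    print(rank, page.url, f"{page.influence_pct}%", page.prompts)
```

In practice you would also want to note whether your brand already appears on each page, so the list only contains real gaps.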

Are competitors actually getting traffic from AI platforms?

Visibility is only half the story. The next question is whether AI mentions translate into real traffic.

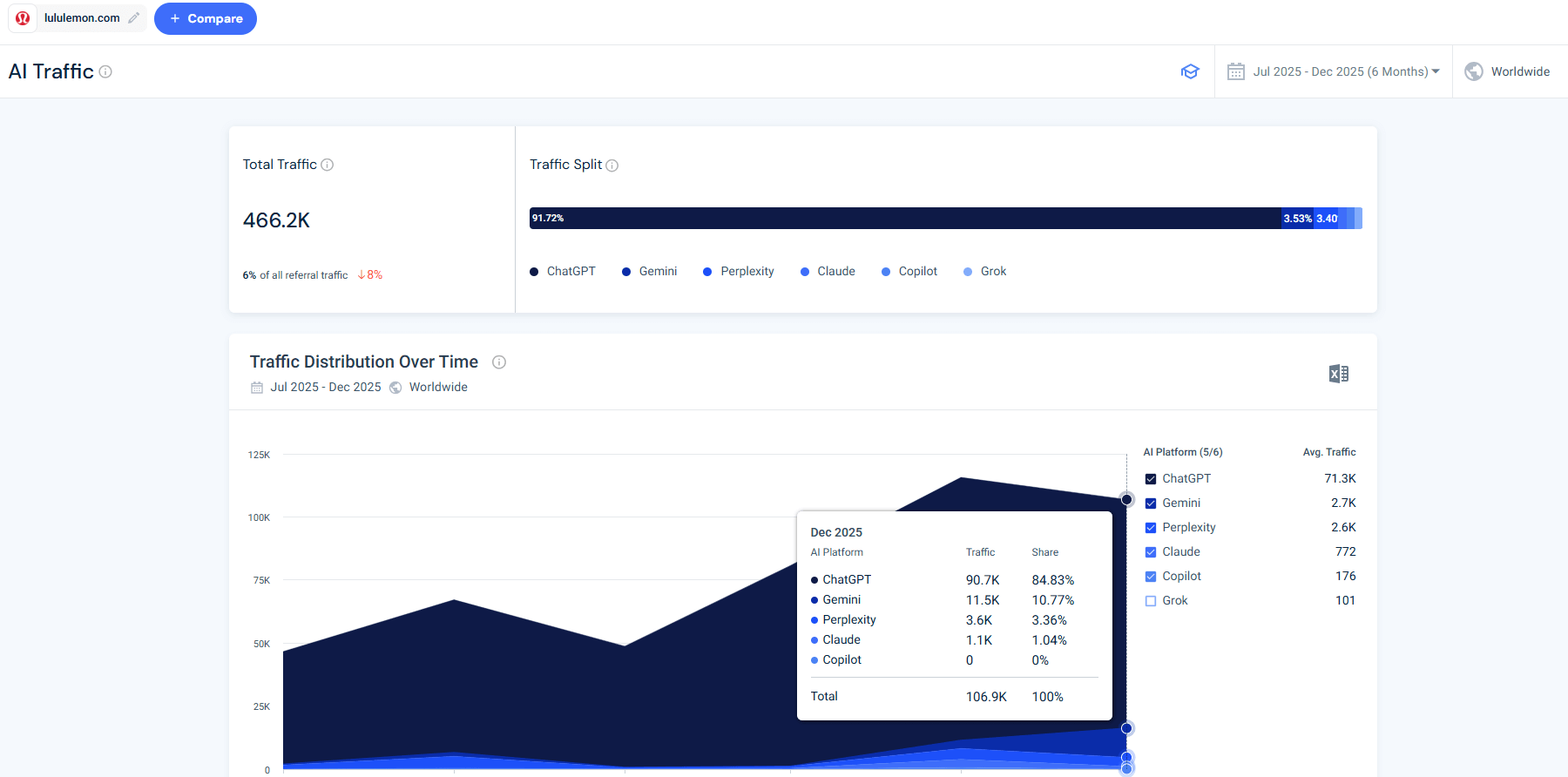

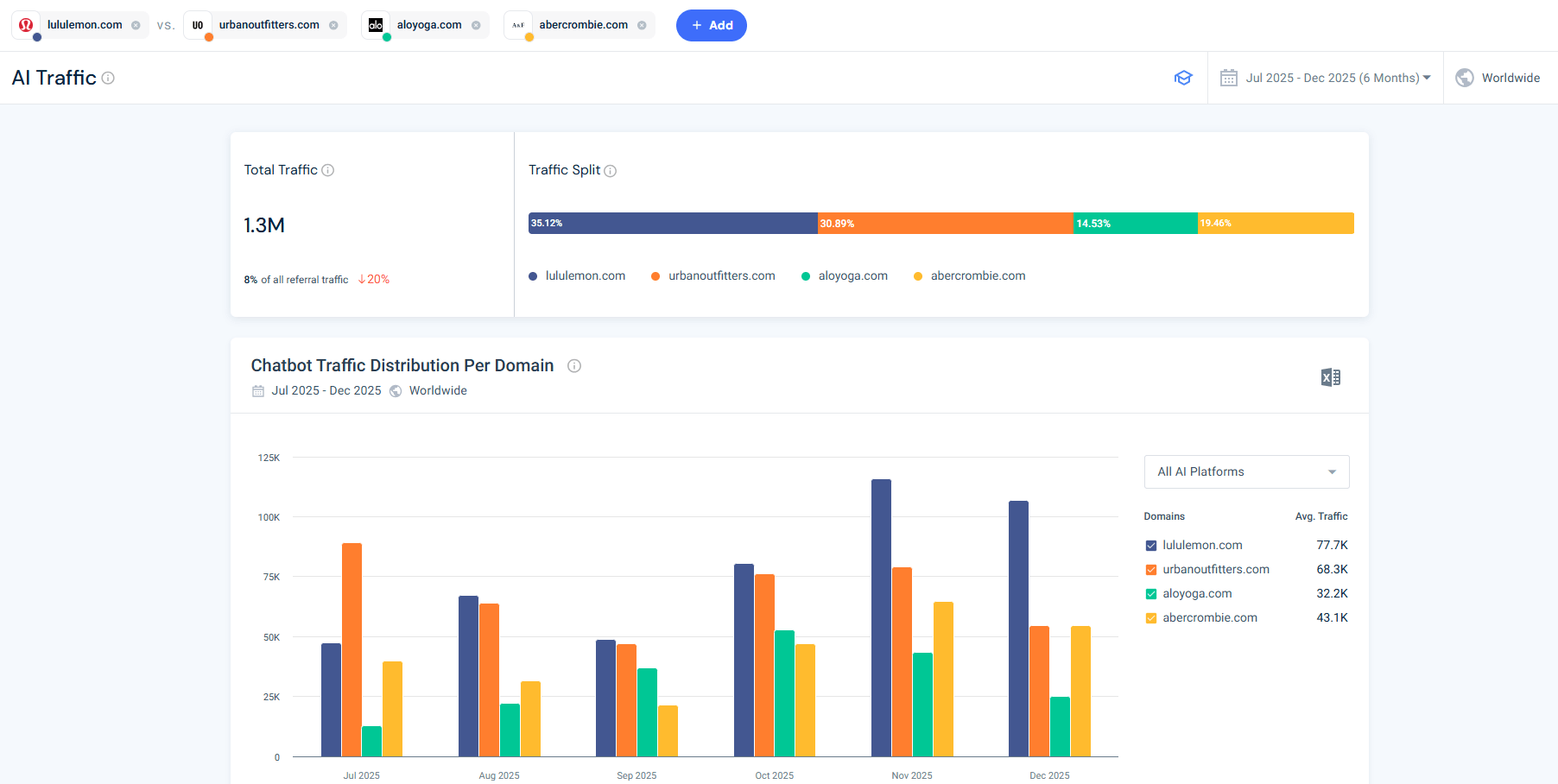

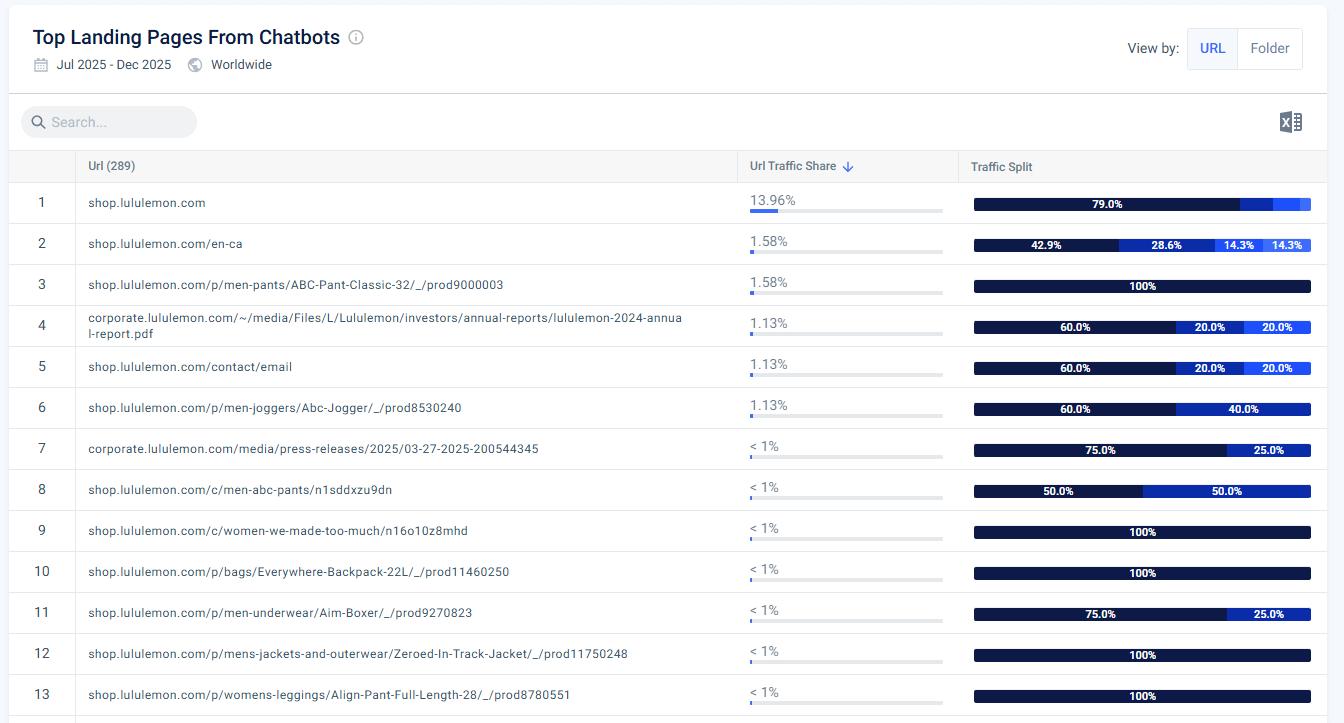

Using Similarweb’s AI Traffic tool, I reviewed how AI platforms drove visits over six months. Staying with the Lululemon example, AI platforms drove hundreds of thousands of visits, with ChatGPT responsible for the majority of that traffic.

When compared against competitors, differences emerged not only in total AI-driven traffic but also in which platforms contributed most. Some competitors showed stronger growth in specific months or benefited more from certain prompt types.

The landing pages receiving AI traffic were also revealing. Product pages, category hubs, press releases, and other clear, crawlable pages performed particularly well.

If competitors are already capturing meaningful traffic from AI platforms, ignoring AI visibility becomes a strategic risk rather than a conservative choice.

How to decide if AI visibility is worth investing in

Before changing budgets or priorities, most teams need clarity on whether AI search actually matters for their business today.

AI visibility is worth prioritizing if:

- Your category is researched conversationally, such as shopping, SaaS, finance, health, or education

- Competitors already dominate AI brand mentions for important prompts

- AI referral traffic is growing for your space

- You rely on consideration-stage discovery, not just branded demand

It is less urgent if:

- Your growth is driven almost entirely by brand demand

- AI answers rarely include links in your category

- Your audience does not actively use conversational search yet

The key is measurement, not assumptions.

Check out my data-driven guide about this – Should You Invest in AI Visibility? A Data-Led Perspective

How to approach AI visibility without burning your SEO budget

AI search optimization should not replace SEO. It should help you make better prioritization decisions.

In practice, this means using AI visibility data to decide where existing SEO and content efforts should be focused, not creating an entirely new motion.

That typically includes:

- Tracking AI brand visibility and influence as GEO KPIs alongside traditional SEO metrics

- Performing AI citation gap analysis before creating new content

- Using prompt intent analysis to guide which pages need updates or expansion

- Investing in off-site visibility only where AI clearly relies on external sources

- Letting AI traffic data inform where budget shifts are justified

Teams that succeed treat AI search as an input into SEO strategy, not a parallel strategy.

Learn more – How to Adapt Your SEO Budget for AI Search Optimization

What this means for GEO, AEO, and SEO strategy

The practical takeaway is that AI search does not require a new discipline or a separate motion.

Instead, GEO and AEO help clarify how discovery is changing and where existing SEO, content, and brand efforts should be focused.

For most brands, this means:

- Using AI visibility data to understand where competitors dominate specific topics and intents

- Prioritizing prompts and themes that influence consideration, not just awareness

- Strengthening presence in the sources AI consistently relies on

- Treating AI search as another signal layer alongside traditional organic data

Approached this way, AI search becomes an extension of the search strategy rather than something that competes with it.

Final thoughts

AI search can feel opaque when you only experience it through individual answers. But once you step back and look at the data, clear patterns start to emerge.

AI platforms operate on a different set of signals than traditional search. They reward brands that are easy to understand, easy to reference, and repeatedly reinforced across the web.

When competitors dominate AI answers, it is rarely because they discovered a hidden trick. More often, it is because they consistently:

- Cover the prompts users actually ask, not just the obvious head terms

- Appear in the sources AI relies on to construct answers

- Are clearly associated with specific topics, use cases, and attributes

When you analyze AI visibility through that lens, it stops feeling unpredictable. It becomes another surface you can measure, prioritize, and improve with intent.

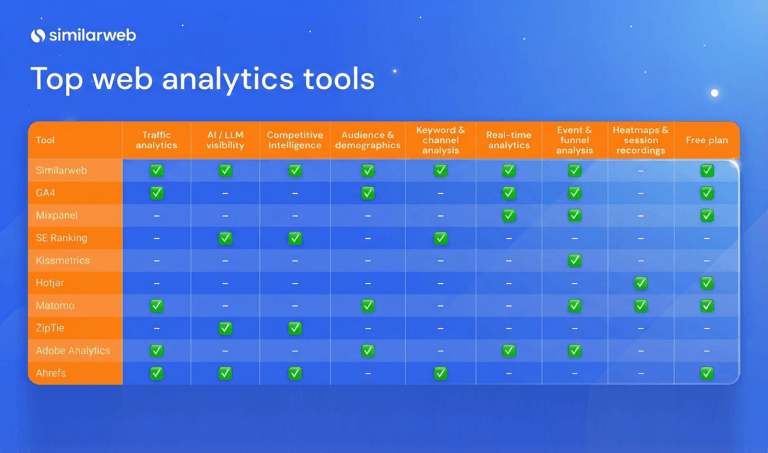

If you want to do that in practice, the starting point is having the right data. Tools like Similarweb’s Gen AI tools make it possible to see how your brand and competitors actually perform across AI platforms, which prompts and sources shape the answers, and whether that visibility turns into real traffic. Without that level of visibility, AI search remains guesswork.

FAQ

Why do my competitors show up in AI answers even when I rank higher in Google?

AI platforms do not rank pages the way Google does. They generate answers based on prompts, citations, and repeated brand associations across trusted sources. A competitor can rank lower organically but still appear more often in AI answers if they are mentioned more frequently in the sources AI relies on.

What should I look at first when my brand is missing from AI answers?

Start by analyzing prompt coverage. Look at which prompts within your key topics mention competitors but not you. Then review citation data to see which domains shape those answers. Missing visibility is usually a prompt mismatch or a source gap, not a technical SEO issue.

Is optimizing my own website enough to improve AI visibility?

Not always. On-site content matters, but AI visibility is heavily influenced by off-site signals. If publishers, review sites, or comparison pages dominate citations in your category, appearing on those sources can have more impact than publishing additional pages on your own site.

How do I know which prompts actually matter for my business?

Focus on prompts tied to consideration and comparison. These often include questions about alternatives, pricing, use cases, sustainability, integrations, or specific attributes. Tracking prompt intent by topic helps separate high-impact prompts from generic ones.

What is the difference between AI visibility and AI traffic?

AI visibility measures how often your brand appears in AI-generated answers. AI traffic measures whether those answers drive users to your site. Both are important. Visibility shows influence, while traffic shows commercial impact.

How often should AI visibility be reviewed?

AI visibility should be monitored regularly, just like organic performance. Monthly reviews are usually enough to spot trends, shifts in competitor dominance, and changes in the sources AI relies on.

Does this apply only to ChatGPT?

No. While ChatGPT currently drives the most AI referral traffic, other platforms like Perplexity, Gemini, and Copilot use similar retrieval and citation patterns. The same visibility principles apply across platforms.
